Introduction to Docker

Docker

Docker is an open platform for developing, shipping, and running applications. It lets us separate our applications from our infrastructure, so that we can build, test, and deploy software quickly.

Docker packages software into standardized units called containers, which include everything the software needs to run: code, runtime, system tools, and libraries. Using Docker, we can deploy and scale applications in any environment that runs Docker and be confident that the code will behave the same way. This removes much of the repetitive configuration work across the development lifecycle and makes cloud and desktop applications easier to build and more portable. The platform consists of several modules, including user interfaces, application programming interfaces (APIs), a command-line interface (CLI), and security mechanisms. These modules work together throughout an application's delivery lifecycle to give developers a consistent development platform.

Virtual Machines (VMs)

A virtual machine emulates an entire computer system on top of a hypervisor, which runs either directly on the hardware or on a host operating system. Each VM runs its own guest operating system, complete with its own kernel and virtualized memory, storage, and network interfaces. This makes it possible to run multiple operating systems and applications simultaneously on a single physical machine. VMs provide strong isolation between virtualized environments, which makes them suitable when full operating system separation and compatibility are required. However, VMs can be resource-intensive because each one runs a full operating system, and they usually take longer to start than Docker containers.

Docker over VMs

When comparing Docker to traditional virtual machines (VMs), there are several compelling reasons why Docker has gained widespread popularity. Here are some advantages of using Docker:

Lightweight and efficient: Docker containers are lightweight because they share the host operating system's kernel, eliminating the need for a separate guest operating system for each container. This results in faster startup times, reduced resource consumption, and higher density of containerized applications on a single host compared to VMs.

Faster deployment: Docker containers are designed for fast and efficient application deployment. They encapsulate the dependencies and libraries an application needs to run, which keeps its behavior consistent across environments. This avoids many compatibility issues and significantly speeds up the deployment process.

Scalability: Docker provides easy scalability, allowing you to quickly scale up or down the number of containers based on the demand for your application. It enables horizontal scaling by distributing the workload across multiple containers, which can be easily managed using orchestration tools like Docker Swarm or Kubernetes.
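To make horizontal scaling more concrete, here is a minimal Python sketch that spreads requests across a pool of container replicas in round-robin order. The `RoundRobinBalancer` class and the `web-N` replica names are hypothetical and exist only for illustration; orchestrators such as Docker Swarm or Kubernetes use their own schedulers and load balancers and also handle health checks and placement.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Toy load balancer: hands out replicas in rotation (illustrative only)."""

    def __init__(self, replicas):
        if not replicas:
            raise ValueError("at least one replica is required")
        self.replicas = list(replicas)
        self._rotation = cycle(self.replicas)

    def route(self, request):
        """Return (replica, request) for the next replica in the rotation."""
        return next(self._rotation), request

    def scale(self, count):
        """Scale up or down to `count` replicas and restart the rotation."""
        if count < 1:
            raise ValueError("count must be at least 1")
        self.replicas = [f"web-{i}" for i in range(1, count + 1)]
        self._rotation = cycle(self.replicas)


balancer = RoundRobinBalancer(["web-1", "web-2"])
print([balancer.route(f"req-{n}")[0] for n in range(4)])

balancer.scale(3)  # demand went up: add a third replica
print([balancer.route(f"req-{n}")[0] for n in range(3)])
```

With two replicas the requests alternate between them; after scaling to three, each new request goes to the next of the three replicas in turn.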

Isolation and security: Docker containers isolate applications from each other and from the host system, so that processes running in one container cannot see or interfere with processes in another. Because containers share the host kernel, this isolation is not as strong as that of a VM, but it still reduces the attack surface and limits the impact of potential vulnerabilities.

Portability and consistency: Docker containers encapsulate the entire runtime environment, including the application code, dependencies, and configuration. This makes them highly portable, allowing you to run the same containerized application on different machines with consistent behavior. It also facilitates collaboration among developers, as they can work with the same containerized environment across different stages of the software development lifecycle.

Overall, Docker's lightweight nature, faster deployment times, scalability, isolation, and portability make it a preferred choice for many developers and organizations seeking efficient and streamlined application deployment and management.

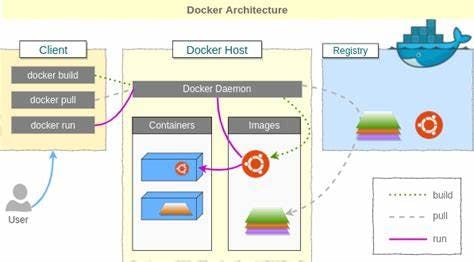

Docker Architecture

Docker's architecture consists of several components working together. Let's take a closer look:

Docker Daemon (Docker Engine): The Docker daemon (dockerd) is the core of Docker Engine and runs as a background service on the Docker host. It is responsible for managing the lifecycle of Docker containers. The daemon receives commands from the Docker client, interacts with the host operating system, and carries out tasks such as creating, starting, stopping, and deleting containers. It also manages images, networking, storage, and the other core functionality of Docker.

Docker Client (Docker CLI): The Docker client is a command-line interface (CLI) tool that allows users to interact with the Docker daemon. With the Docker client, users can build, run, stop, and manage containers, images, networks, and volumes. The client communicates with the Docker daemon through a REST API, sent over a UNIX socket or a network interface.
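The client and daemon exchange ordinary HTTP requests, which can be illustrated with a short Python sketch that talks to the daemon using only the standard library. It assumes a Linux or macOS host where the daemon listens on `/var/run/docker.sock` (a common default, but still an assumption here) and uses the Engine API's `GET /version` endpoint. The `UnixHTTPConnection` class and `engine_get` helper are names made up for this example; this is not how the official client is implemented.

```python
import http.client
import json
import os
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # assumed default socket path


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that runs over a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        # "localhost" is only used to fill in the HTTP Host header.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path, socket_path=DOCKER_SOCKET):
    """Send GET `path` to the daemon; return parsed JSON, or None on any failure."""
    if not os.path.exists(socket_path):
        return None
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            return None
        return json.loads(body)
    except (OSError, http.client.HTTPException, ValueError):
        # Covers a missing daemon, permission errors, and malformed replies.
        return None
    finally:
        conn.close()


version = engine_get("/version")
if version is None:
    print("Docker daemon not reachable on", DOCKER_SOCKET)
else:
    print("Docker Engine version:", version.get("Version"))
```

If no daemon is running, or the current user lacks permission to open the socket, the sketch reports that the daemon is not reachable instead of raising an error.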

Docker Host: The Docker host refers to the physical or virtual machine where the Docker daemon runs. It provides the underlying infrastructure and resources for running Docker containers. The host operating system hosts the Docker daemon and manages the hardware resources like CPU, memory, disk, and network interfaces. Containers run directly on the Docker host's kernel, making them lightweight and efficient.

Docker Hub/Registry: Docker Hub is a public registry maintained by Docker, Inc. It serves as a central repository for Docker images. Users can search for and pull images from Docker Hub and push their own images to it. It contains a vast collection of pre-built images that can be used as base images for application containers. In addition to Docker Hub, organizations can set up their own private registries to store and distribute custom images within their infrastructure.
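Whether an image comes from Docker Hub or a private registry is determined by its name. The Python sketch below shows a simplified version of how an image reference breaks down into registry, repository, and tag, with Docker Hub (`docker.io`), the `library/` namespace for official images, and the `latest` tag as defaults. The function name `parse_image_reference` and the sample names are hypothetical, and digest references (`name@sha256:...`) are deliberately left out.

```python
def parse_image_reference(reference):
    """Split an image reference into (registry, repository, tag).

    Simplified sketch: digests (name@sha256:...) are not handled.
    """
    registry = "docker.io"  # Docker Hub is the default registry
    remainder = reference

    first, slash, rest = reference.partition("/")
    # The first component counts as a registry host only if it looks like one.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest

    # A tag is whatever follows a ':' in the last path component.
    last_component = remainder.rsplit("/", 1)[-1]
    if ":" in last_component:
        remainder, tag = remainder.rsplit(":", 1)
    else:
        tag = "latest"

    # Official Docker Hub images live under the implicit "library/" namespace.
    if registry == "docker.io" and "/" not in remainder:
        remainder = "library/" + remainder

    return registry, remainder, tag


for name in ["nginx", "myuser/myapp:2.0", "registry.example.com:5000/team/api"]:
    print(name, "->", parse_image_reference(name))
```

A bare name such as `nginx` resolves to Docker Hub, while a name that starts with a host (here the made-up `registry.example.com:5000`) points at a private registry.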

Docker Image: A Docker image is a read-only template that contains the files, libraries, dependencies, and configuration required to run a specific application or service. Images are usually built from a text file called a Dockerfile, which lists the instructions to assemble the image layer by layer. Each layer represents a modification or addition to the previous layer, resulting in a lightweight and immutable image. Docker images are portable and versioned, and they can be shared across different Docker hosts and environments.
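The layer-by-layer idea can be modeled in a few lines of Python. In this sketch each layer is a dictionary of file changes, an image is an ordered tuple of layers, and the final filesystem is what you get by applying the layers from bottom to top. The file paths, contents, and helper names are hypothetical. Real layers are filesystem diffs stored as archives and their IDs are computed differently, so treat this only as an illustration of stacking and of identical layers being reusable between images.

```python
import hashlib
import json


def layer_id(changes):
    """Content-derived ID: the same changes always hash to the same ID."""
    payload = json.dumps(changes, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


def build_image(steps):
    """Turn an ordered list of change sets into a tuple of (id, changes) layers."""
    return tuple((layer_id(changes), dict(changes)) for changes in steps)


def flatten(image):
    """Apply layers bottom to top; later layers override earlier ones.

    A value of None marks a file deleted by that layer.
    """
    filesystem = {}
    for _id, changes in image:
        for path, content in changes.items():
            if content is None:
                filesystem.pop(path, None)
            else:
                filesystem[path] = content
    return filesystem


base = {"/etc/os-release": "hypothetical base OS", "/tmp/cache": "build cache"}
deps = {"/usr/lib/libexample.so": "library", "/tmp/cache": None}
app = {"/app/main.py": "print('hello')"}

image_a = build_image([base, deps, app])
image_b = build_image([base, deps, {"/app/main.py": "print('other app')"}])

print(sorted(flatten(image_a)))
shared = {i for i, _ in image_a} & {i for i, _ in image_b}
print(len(shared), "layers shared between the two images")
```

The two images differ only in their top layer, so the base and dependency layers have the same IDs in both.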

Docker Container: A Docker container is a lightweight, standalone, and executable runtime instance of a Docker image. Containers are created from Docker images and run as isolated processes on the Docker host. Each container has its own isolated filesystem, network stack, and process space. Containers provide an isolated and reproducible runtime environment for applications, ensuring consistency across different deployment environments. Multiple containers can be created and run from the same image, each operating independently.
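The relationship between one image and many independent containers can be sketched in Python with `collections.ChainMap`: each container gets its own empty writable mapping stacked on top of a shared, read-only image. The paths and names below are hypothetical, and the sketch models only the filesystem, not process or network isolation.

```python
from collections import ChainMap
from types import MappingProxyType

# Read-only view standing in for an image's filesystem (hypothetical paths).
IMAGE = MappingProxyType({"/app/main.py": "v1", "/etc/config": "default"})


def create_container(image):
    """Give each container its own writable layer on top of the shared image."""
    return ChainMap({}, image)  # writes only ever touch the first mapping


web_1 = create_container(IMAGE)
web_2 = create_container(IMAGE)

web_1["/etc/config"] = "tuned"  # change made inside container 1 only
web_1["/tmp/session"] = "abc"

print(web_1["/etc/config"])      # tuned
print(web_2["/etc/config"])      # default
print(IMAGE["/etc/config"])      # default
print("/tmp/session" in web_2)   # False
```

Changes made in one container are visible neither in the other container nor in the image, which is why the same image can be started many times with identical initial state.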

In summary, Docker architecture consists of the Docker daemon managing containers on the Docker host, the Docker client interacting with the daemon to issue commands, the Docker Hub/Registry serving as a central repository for Docker images, Docker images as the templates containing application code and dependencies, and Docker containers as the runtime instances of Docker images.

Advantages of Docker

Here are some key advantages of using Docker for application deployment:

Docker allows multiple applications with different requirements and dependencies to be hosted together on the same host, as long as they can all run on the host's operating system kernel.

It optimizes storage: images can be as small as a few megabytes, and layers shared between images are stored only once, so a large number of applications can be hosted on the same host.

Docker containers are lightweight: they use less memory than virtual machines because they do not run a guest operating system, and they typically start in a few seconds, whereas virtual machines may take minutes to boot.

Docker reduces costs by being less demanding in terms of hardware requirements.

Docker provides a consistent environment across different systems, ensuring that applications run the same way regardless of the underlying infrastructure.

Thank you for reading!!

Amrit Subedi.